I have spent a lot of time in academia, and the last four years at commercial startups, including running a few myself. This gives me a special vantage point on upcoming computing areas – I want to jump over what’s interesting now to what will be interesting in 5 years. My current job hunt has been a really great excuse to do an industry overview and talk to everyone; I honestly wish I could do a two-month sabbatical each year and go spend a day talking to every single interesting company or research organization.

In the next decade, the most interesting computing trends to me are:

– Synchronicity and Liveness

– Cyborgized Cognition

– Telepresence Embodiment

I want to work on these problems, whether in research or commercially. If you work in this area and want to just chat, or hire me, send me an email or a tweet.

I’m going to talk about the next decade shortly, but first here are a few trends I participated in that changed significantly over the last decade:

Tabletop Computing used to be a big deal, with the Microsoft Surface, DiamondTouch and interactive wall displays. My Master’s Degree was all about tabletop computing. However, we don’t really have anything exciting happening in this area now – nobody does day-to-day computing on or around horizontal surfaces. The only interesting game in town is maybe TiltFive.

Spatial Computing as a term has managed to get out of tech and academic circles and into general discussion. This is great – it’s a useful summary of all the interesting work happening in Computer Vision, AR, VR and Volumetric Capture. Previous attempts to summarize the field fell into useless 360 vs Stereo or AR vs VR vs XR vs “Cinematic Reality” hair-splitting.

Gestural Interaction was how I thought (and many people still think) we’d interact with computers in the future. The idea of implicit input, or that maybe people will learn American Sign Language to talk to computers, is so enticing that it’s drawn too many people in. But gestural interaction has failed to get real traction. There’s just too much noise in real-life gestural environments. Most gestures actively used in hand-tracking UIs are indexical instead of symbolic, and keyboards and voice interaction are still the most important inputs.

Deep Learning unfortunately means too many things right now. Anything that involves some statistical analysis is now called Deep Learning. Marketers that used to use SPSS to analyze Likert scores instead use differently-branded software packages to do deep learning, with an unclear change in actionability. Doing an analysis of an image based on edge detection could be construed as using a small CNN, so it is now called Deep Learning, too. I’m not trying to gatekeep, but I do wish that in general people were more specific, and didn’t use an obfuscated deep learning technique when a much clearer tool like linear regression works totally fine and is easy to understand.

Let me tell you what I’m excited about for the next decade of computing.

Synchronicity and Liveness

We are so used to the convenience of asynchronous communication that it’s now common to text or schedule in advance of calling, and calling someone without warning is considered either rude, requiring an apology, or a sign of a potential emergency. In timezone-distributed tech circles, almost every meeting is a Google Calendar invite. Messages are sent slightly before or after the scheduled time, with either an apology that someone is slightly late, or a request to confirm that the meeting is still on. There’s just too much overhead.

To have quality synchronous time, people still fly in to meetings. This has been a bit bizarre during my current job hunt as companies have spent probably mid-five-figures now flying and hotelling me various places, only to have many of my “onsite” meetings happen in a single-person conference room with a remote employee over Zoom.

Slack usage is pervasive at tech companies of all sizes, since instant messaging is better than email for quick, time-sensitive communication. However, if an employee gets overwhelmed with notifications, they tend to turn them off. Incentives for communicating honestly about your availability are misaligned, and in-person cues about availability aren’t properly translated.

In in-person meetings at someone’s desk, you can get a sense of how busy they are, or whether they’re talking to someone else and can be interrupted if you need to. Currently our interaction states are binary: I’m in a call, or I’m not. I’m “online” in Slack, or I’m “offline”, and I may have set the “offline” status artificially because I need to concentrate on something and can’t be bugged at the moment.

I hope new research or businesses explore making synchronicity at a distance more friendly. Calendly has been incredibly useful for me to schedule time to speak with recruiters, as it reduces all the back-and-forth scheduling iterations to a single click. I wish that when I called someone and they were busy, we could set up a system to automatically initiate the call again when their current call is done, or when we’re both free again. Dialup – sort of a synchronous, audio-based social network – is doing very interesting things with calls that aren’t initiated by either participant. There’s interesting research, or a product, to be done in communicating and requesting availability – this is handled well in person with body language, open or closed doors, and quick, ephemeral interruptions that don’t disrupt ongoing meetings. Computing doesn’t handle this well at all. I almost want an always-on video conference setup that people can request to join, with a queue if the join isn’t immediate.
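To make the "call me back when we’re both free" idea a bit more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `CallbackQueue`, `request_call` and `end_call` are names made up for illustration, not any real product’s API, and it assumes that presence is simply "in a call or not", which is exactly the binary state complained about above.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class CallbackQueue:
    """Hypothetical sketch: queue callers for busy people, reconnect when both are free."""
    busy: set = field(default_factory=set)       # people currently in a call
    waiting: dict = field(default_factory=dict)  # callee -> deque of queued callers

    def request_call(self, caller, callee):
        """Connect right away if both are free; otherwise remember the request."""
        if caller in self.busy or callee in self.busy:
            self.waiting.setdefault(callee, deque()).append(caller)
            return "queued"
        self.busy.update({caller, callee})
        return "connected"

    def end_call(self, a, b):
        """Free both parties, then start any queued calls that have become possible."""
        self.busy.difference_update({a, b})
        started = []
        for callee, callers in self.waiting.items():
            if callee in self.busy:
                continue
            # First come, first served among callers who are free right now.
            for caller in list(callers):
                if caller not in self.busy:
                    callers.remove(caller)
                    self.busy.update({caller, callee})
                    started.append((caller, callee))
                    break
        return started


queue = CallbackQueue()
queue.request_call("ana", "ben")     # both free, so they connect
queue.request_call("cy", "ben")      # ben is busy, so cy is queued
print(queue.end_call("ana", "ben"))  # cy's queued call to ben starts automatically
```

A real system would need richer availability than a single busy flag, plus consent on both sides before a call starts, but the queue itself is the easy part.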

When I was running ticketing for The Aluminum Cat, one of the surprises was that no online ticketing service handled time zones well. It turns out that most tickets purchased are for in-person events, so this use case isn’t handled well at all. I’d love a company to build an equivalent of Doodle + eBay + Calendly for bidding on group activities, where a booking transaction occurs automatically once quorum (financial, headcount) is met.
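The quorum rule is simple enough to sketch. The following is a toy model, not a description of any existing service: `GroupBooking`, its fields and the idea of storing pledges in cents are all assumptions made for illustration. The booking fires the moment both the headcount and the financial threshold are met.

```python
from dataclasses import dataclass, field


@dataclass
class GroupBooking:
    """Hypothetical sketch: book automatically once headcount and money quorum are met."""
    min_headcount: int
    min_total_cents: int
    pledges: dict = field(default_factory=dict)  # person -> pledged amount in cents
    booked: bool = False

    def pledge(self, person, cents):
        """Record (or update) a pledge and return True once the booking has triggered."""
        if self.booked:
            raise ValueError("booking already completed")
        self.pledges[person] = cents
        enough_people = len(self.pledges) >= self.min_headcount
        enough_money = sum(self.pledges.values()) >= self.min_total_cents
        if enough_people and enough_money:
            self.booked = True  # a real service would charge everyone here
        return self.booked


outing = GroupBooking(min_headcount=3, min_total_cents=6000)
outing.pledge("ana", 2000)
outing.pledge("ben", 2000)
outing.pledge("cy", 1500)          # headcount met, but only $55 of the $60 needed
print(outing.pledge("dee", 1000))  # both thresholds met, so this prints True
```

The interesting design questions are the ones this skips: deadlines, letting people withdraw, and what happens to a pledge when the price per head drops as more people join.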

Cyborgized Cognition

The average person still refers to artificial intelligences, algorithms and machine learning as “the other”. These often feel a lot like “the other” because when we interact with them, they’re run by large, impersonal companies whose interests aren’t the same as ours. An AI may be branded as a decision engine, but if it’s run by a company that also serves ads, it doesn’t generate great consumer trust that my behaviour isn’t being influenced against my best interests.

Once we fix this trust problem (which might require fixing capitalism, tbh), we can start forming better, more useful, actionable relationships with AI – a cyborgization.

The AIs I actively engage with most on a regular basis are my Gmail spam/important filters, and my Facebook and Twitter feeds. Training my Gmail spam/important filters feels like gardening, in that I know it takes extra work to mark messages as important or not instead of deleting or archiving them, but I think it will make my inbox more manageable in the future. When I tell Facebook, Twitter or Instagram that an ad or a post isn’t relevant for me, I don’t have the same relationship, because I know these social networks prioritize engagement (ad views) over what I may want. “We’ll show you less of these in the future” is the best possible phrase to describe the change, but it still feels passive-aggressive, since we know the feed algorithms aren’t ultimately trying to serve us.

I have enough presence of mind to spend time gardening algorithms, but when I talk to less tech-savvy people, I find they don’t understand (or believe) that algorithms can change. There is definitely interesting ethnographic research to be done on how the average user can be educated about interacting with algorithms – currently they’re treated like omniscient oracles, or malicious agents able to read your mind. I’d also love to have a browser plugin that lets me know when a news feed is changing on me, sort of like sousveillance for A/B testing.

For cyborgized cognition, I think our day-to-day lives will eventually resemble a continually negotiated tradeoff between the tasks your AI handles and the tasks you handle. Cyborg chess is a good model for this. There are startups that use the term “personal assistant” or “concierge”, which I feel a bit grossed out by, since I grew up middle-class and those are things that only useless rich people use. I think there will eventually be lots of interesting research and design work to be done in the area of users and an AI negotiating implicit permission and agency, but none of that can be used effectively in the wild until the trust relationship is better handled.

Maybe Apple will figure it out first. Historically, engaging with an algorithm that you don’t own and that runs on large compute resources has meant giving up a significant chunk of your privacy. However, there’s a recent Apple Machine Learning Journal publication that has demonstrated remote model training that’s privacy-conscious.

Telepresence Embodiment

Between spatial computing, remote presence via drones such as Double, wall displays such as Tonari, and live volumetric capture such as Mimesys (acquired by Magic Leap), people are going to spend more time interacting with other people and things at a distance with varying degrees of embodiment.

There are interesting problems to be solved around scheduling, which I already covered in my section on Synchronicity, but embodiment and expression are an interesting area as well.

Embodiment could be for the purposes of self-expression, as has been common in online games for a long time. Wearing a different avatar is like wearing a mask. To appear older or of a different gender in voice chat, people have often used audio filters. In social spatial computing environments with live motion capture, such as VRChat or Rec Room, it’s foreseeable that users may want motion filters – to appear more masculine, or less clumsy, or mobile at all when they may be quadriplegic.

For human-negotiated attention in remote presence, eye contact is important. Pluto is currently exploring using depth sensor data to correct remote eye contact during video calls so that face-to-face eye contact is preserved.

A short demonstration of eye contact with Pluto iOS and a comparison to the standard selfie camera. #arkit #TrueDepth #sharedpresence pic.twitter.com/N9m5ZqKHz7

— PlutoVR (@PlutoVR) June 14, 2019

Update: Attention Correction is included in the upcoming iOS 13:

How iOS 13 FaceTime Attention Correction works: it simply uses ARKit to grab a depth map/position of your face, and adjusts the eyes accordingly.

Notice the warping of the line across both the eyes and nose. pic.twitter.com/U7PMa4oNGN

— Dave Schukin 🤘 (@schukin) July 3, 2019

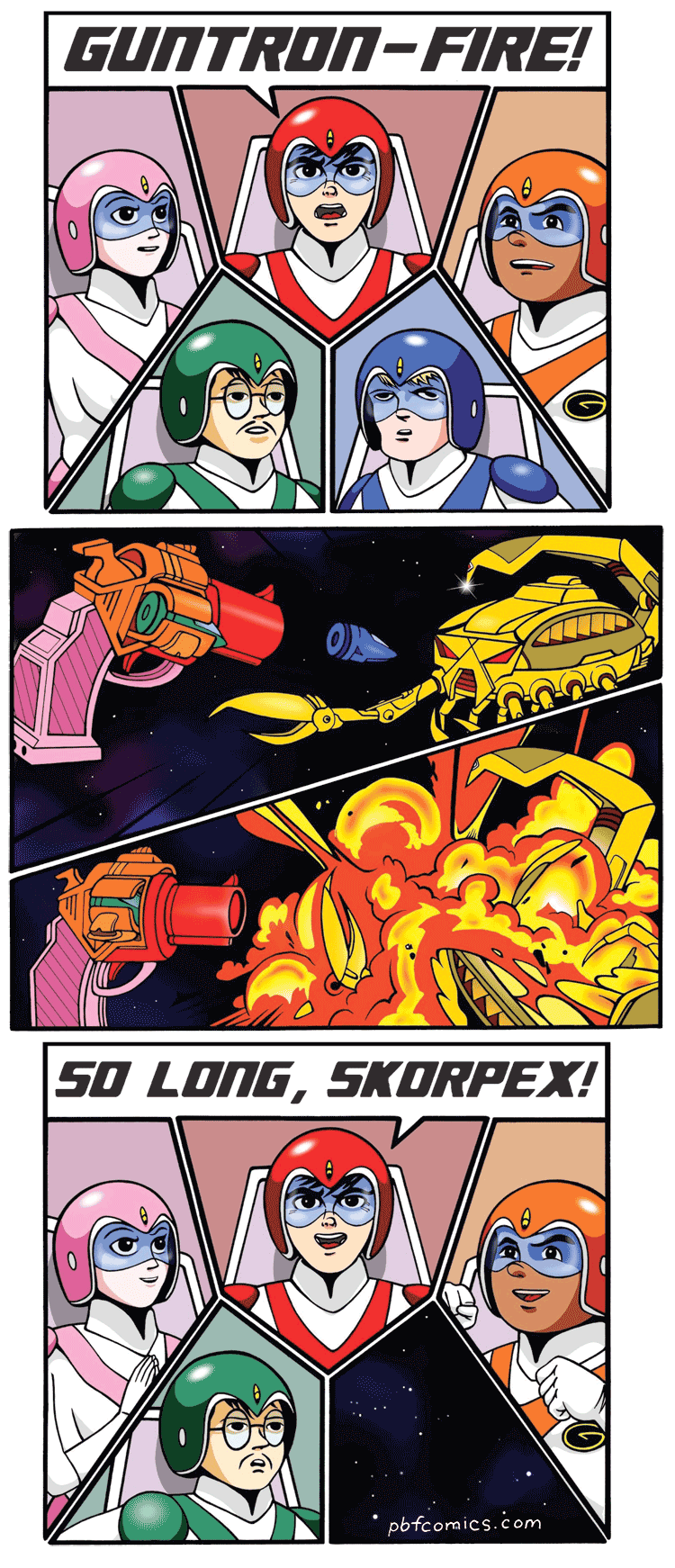

This works pretty immediately in a one-on-one video conference setup, if you want to preserve literal eye contact. But what if you’re in a multi-way video chat, like you’ve just formed Voltron:

In this case, it becomes clear that just faithfully reproducing eye contact is overly literal. If I’m person A, I may be able to see when person B is looking at me, but when person B looks at person C, I may not be able to tell. It may be better to adjust person B’s video, as it appears to person A, so that person B’s eyes are oriented towards person C.
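As a rough illustration of that adjustment, here is a small Python sketch. It assumes things the post doesn’t specify: that each viewer has their own grid layout with known tile centres, that we already know who each participant is looking at, and that the renderer can rotate a face’s gaze to a given on-screen angle. The function name `rendered_gaze` and the layout dictionary are hypothetical.

```python
import math


def rendered_gaze(viewer, speaker, target, tile_centers):
    """Gaze angle (degrees, screen coordinates) for `speaker` as drawn on `viewer`'s screen.

    `tile_centers` maps each remote participant to the (x, y) centre of their
    tile in the viewer's own layout, with y increasing downwards.
    Returns None when the speaker is looking at the viewer, meaning
    "render direct eye contact, straight out of the screen".
    """
    if target == viewer:
        return None
    sx, sy = tile_centers[speaker]
    tx, ty = tile_centers[target]
    # Point the speaker's eyes from their own tile towards the target's tile.
    return math.degrees(math.atan2(ty - sy, tx - sx))


# Person A's layout: B's tile is on the left, C's tile is on the right.
layout_for_a = {"B": (0.0, 0.0), "C": (1.0, 0.0)}

print(rendered_gaze("A", "B", "C", layout_for_a))  # B looks right, towards C's tile
print(rendered_gaze("A", "B", "A", layout_for_a))  # None: B makes eye contact with A
```

The same gaze event therefore produces a different rendering for every viewer, because each viewer’s layout places the tiles differently.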

The interesting conclusion here is that with remote presence, there are many reasons to transmit higher-fidelity expressions of embodiment, but not to reproduce them literally at the remote end.