-

Let Me X That For You

It happened. I asked a technical question in a forum. A day later, someone I don’t know responded “would this work?”, with a short link to them copying my query directly to a text-based AI. The AI’s response was completely irrelevant and unusable, despite giving the standard “verbose confident technical” aesthetic. 10-15 years ago, it…

-

San Francisco, 2016

It was 9 am-ish in mid-December. I sat in a cafe along Folsom Street in the SOMA neighbourhood of San Francisco. This was the nearest place to me that had caffeine. Scratch that – the actual nearest place was a tiny 24/7 corner store (you’d call it a “bodega” in NYC), and had prepared coffee…

-

Millennial Superconductor

A superconductive material that operates at room temperature (LK-99) has been reported in the last couple of weeks. This is exciting not just because of the potential applications, but also because of the bizarre drama around the release of the news and the race to independently verify it. This is especially exciting for me personally, since my first-ever…

-

Hyperlexia

Pictured above: A photo of the glass door of a cafe in Queens, NY, looking from outside into the cafe. The door has identical handles on the outside and inside, which could be used for pushing or pulling. On the outside of the door is a decal with cut-out letters that say “PULL”. On…

-

“Billiards, but they’re People”

I just finished nearly a whole month of travel, the last bit of which was in Latvia where I participated in a week-long game jam in a castle. Fellow game dev Charlie Behan made a great mini-documentary of the event. I’ve done a lot of game jams, and with this one, I needed to get…

-

Books Read 2022

The Walrus and The Warwolf by Hugh Cook, i.e. the picaresque story of Drake, shithead pirate teen in a world of decaying magic, was far and away the most impactful book to me. Don’t just take my word for it – read China Miéville’s commentary. In non-fiction, The 1619 Project is a must-read to exist…

-

Books Read 2021

I am in the habit of publishing the list of books I read every year. Due to world events, I forgot the last couple years. Here we are now… NK Jemisin’s The Fifth Season and MJ Lyons’ Queer Werewolves Destroy Capitalism were the most memorable pieces of fiction; both felt very human. Eric Tyson’s Investing…

-

Jobs in Industrial Research

This post summarizes advice I’ve given several people who are looking for jobs in industry just after finishing an academic research degree. Here, I want to be helpful by not just getting you any job, but a job that fits you and that you excel in. It isn’t the best term, but I’m…

-

MetaMovie’s Alien Rescue

This afternoon, I played in the high-end VR LARP The MetaMovie Presents: Alien Rescue. This was a ticketed show, with multiple live actors, some audience that could speak (like myself), and some audience with free-floating avatar cameras, called “eyebots” with in-world lore. The recording is on Twitch: https://www.twitch.tv/videos/1807898598 I chose the alias “In Clutch”, as I…

-

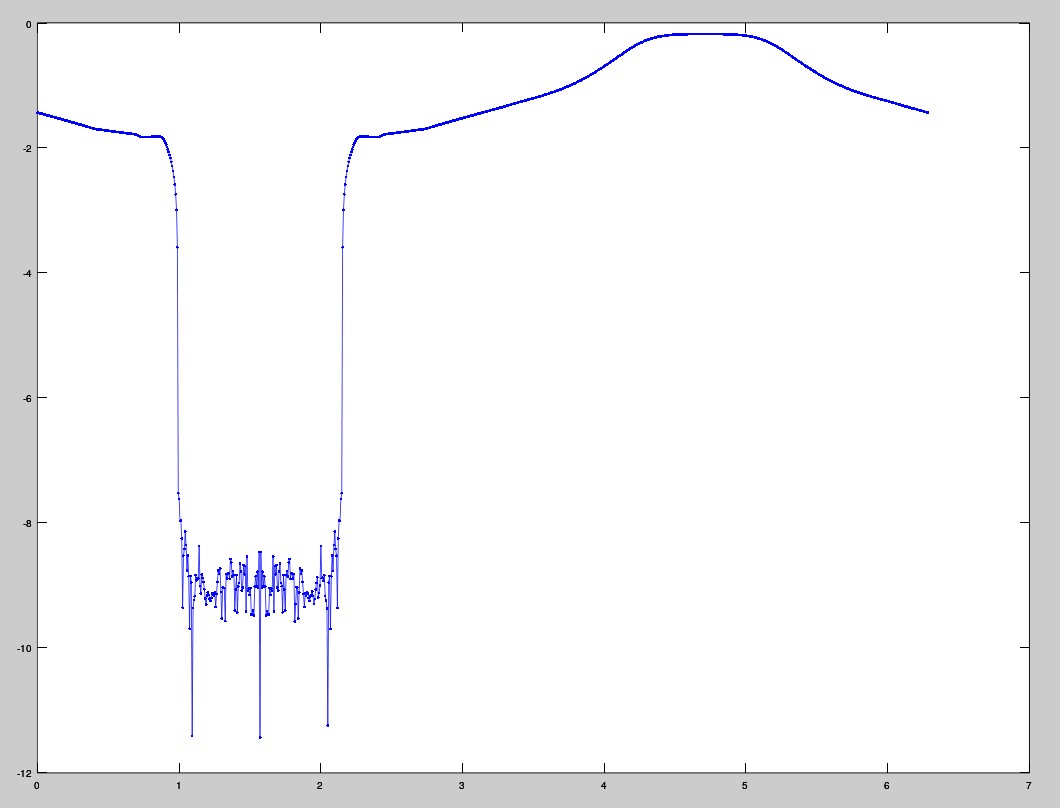

Sabbatical Themes

Late 2022, I quit my job at Meta Reality Labs. I’m not looking for any commercial projects until at least 2024. In academia, a year outside your normal work environment is formalized as a “sabbatical”; this is my DIY Sabbatical. Gestural Input, 2008-2023: At Meta, I was working on EMG Input for AR since the CTRL…