-

Books Read 2024

I ended up reading surprisingly few books this past year, as I focused on more tangible sabbatical projects. A few of these books I read async with a group of people; I’m excited to share how to do this well in the future. For my favourite book, it’s a toss-up between The Taming of Chance,…

-

Effective Playtesting Rituals

Every community of creators should have a regular playtest series. This is true whether it’s an ad-hoc community of independent creators, an academic research group, or a large corporation. If there isn’t a playtest series, you should start one. This post is for you. I have been involved in several recurring playtest series. I first…

-

Lifesteal on a Technicality

I am playing Marvel’s Midnight Suns. Like any big modern game, it is a sloppy mess of systems, for which the balance and neatness of design don’t matter, since the point is the joy of the complex systems smooshing together. Many characters can take actions to add the “Lifesteal” modifier to attacks. Traditionally, Lifesteal (or…

-

The Phoebe Strategy

Based on recent events, I propose that we should all be aware of a new meta in psy-op strategies… Obviously, no sensible person believes that JD Vance had sex with a couch. The humour of this claim, fair or not, is about whether one can argue that “he seems like the kind of guy that…

-

Temporal Liminality

This week, I’m staying at a house in the middle of nowhere in Northern Arizona, after a visit to Las Vegas. When I walk from the upstairs bedroom down to the ground floor kitchen, the time zone changes. This is reproducible, reliably. It took me a couple days to notice, since the purpose of this…

-

The Year of 14Whatever

Imagine the TV show Connections, but it’s a stream of consciousness series of errors because I’m an impulsive idiot. I’d call it Missed Conceptions. 1491, a book that came out in 2006, is a reframing of what the Americas (continents North and South) were like before Columbus arrived. Contrary to general colonist perception, the people…

-

Books Read 2023

Looking through this list, I read an incredibly good set of books this year. In each of my annual “books read” posts I try to pick the best or most impactful one, and this year is very hard. It’s the first full year of my sabbatical, so I delved deeper into non-fiction topics, many of…

-

The Nose: A New Immersive Theatre Show

This fall, I’ve been working intensively on directing and producing a new immersive theatre show out of The Lower Case, called The Nose. The full title, in true style, is The Nose: A Full and Complete Explanation For The Events of Nov 14-16, 1983. The show opens tonight, and runs for all of January. Grab…

-

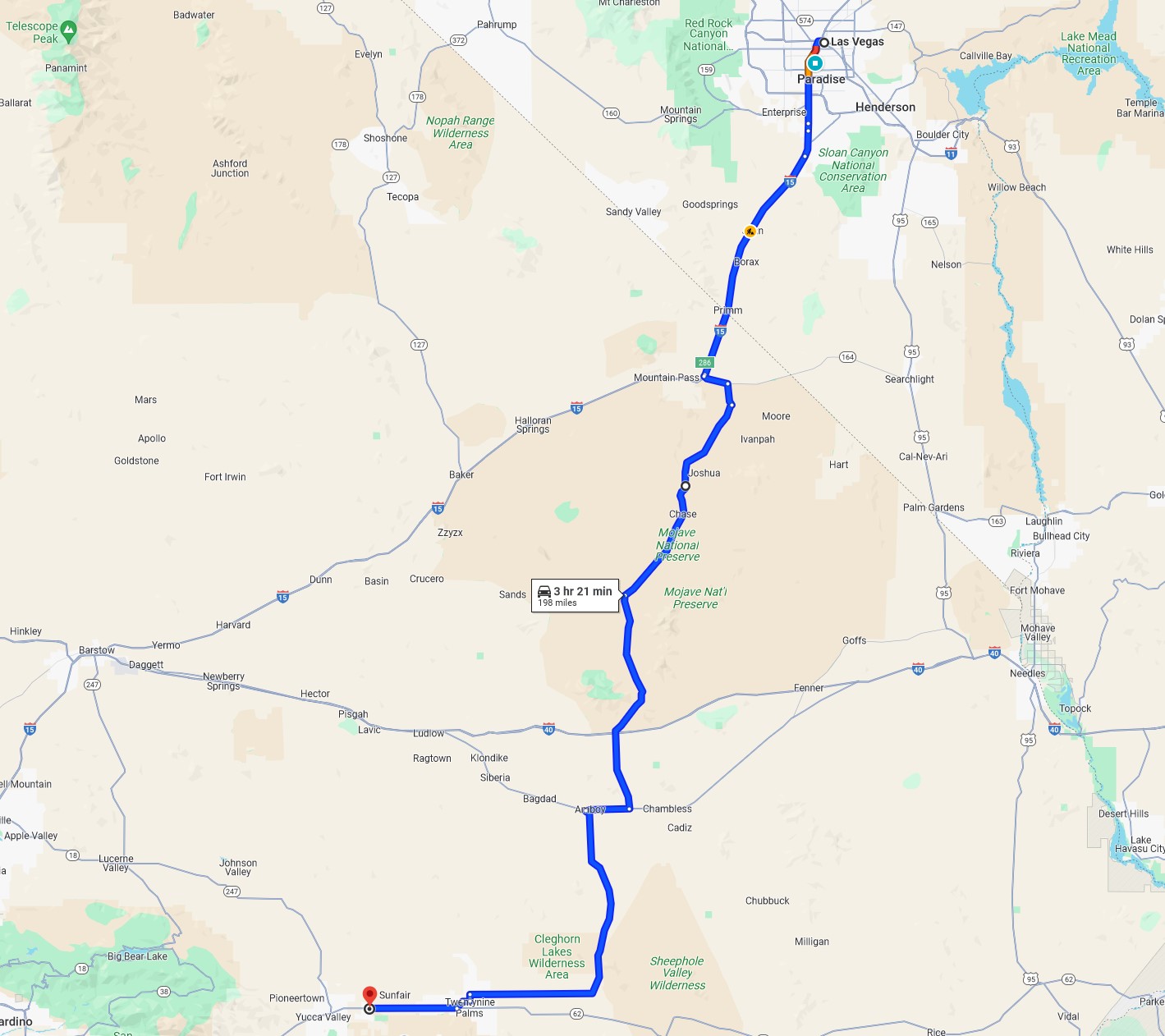

Music for a Desert Drive

I’m visiting Joshua Tree in late December. I delayed buying the flight more than I should have, and by that time a flight directly into Palm Springs was expensive. The backup plan was to fly into Los Angeles, but this would lead to a slogging drive across legendary urban sprawl in the middle of holiday season…

-

Support Hot Potato

Text from an email I wrote nearly two years ago, after a corporate re-organization: Here is a common scenario at a megacorp, a glimpse into “real programming”. Group A (imagine they’re the point-of-view character in this story) makes a software component, and it is widely well-received. Group B hears about it and wants to use…