Near the end of the first semester of my Master’s, my research topic was becoming clearer: something along the lines of “teaching the use of gestural interfaces”. This was motivated by the proliferation of gestural interaction on devices of many form factors. Much of my thinking on this was driven by my improv and theatre background, especially miming and mimicry. After a few emails and calls, I have been hired as an intern at Microsoft Research in Redmond, Washington, from January to April, to work with the Microsoft Surface team on teaching gestural interface use and on measuring how well a user has learned. With that in mind, here are the courses I took last semester and the projects I did.

Computational Biology

This course takes problems solved in computer science and maps them onto problems in biology that are becoming harder as more data becomes available. I initially took this course only because my program requires “breadth” courses, but it turned out to be pretty enjoyable. I was part of a sub-group that examined current research into codon bias. My final project was on adapting a gene from one organism for use in another, recoding it to match the target organism’s codon bias so that it would perform better there. I wanted a picture from each course, but since I don’t really have a good one for Comp. Bio., here are a bunch of completely unexplained equations I made for my final project.
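For readers unfamiliar with codon bias, one common way to adapt a gene to a new host is to swap each codon for the host’s most frequently used synonymous codon, then score the result with a Codon Adaptation Index (CAI). The sketch below is only an illustration of that general idea, not necessarily what my project did: the `HOST_USAGE` table is a hypothetical, partial one with made-up frequencies, and `optimize` and `cai` are illustrative helper names.

```python
import math

# Hypothetical, partial codon-usage table for an imaginary target host:
# amino acid -> {codon: relative frequency among that amino acid's codons}.
# A real table covers every codon and is computed from the host's own genes.
HOST_USAGE = {
    "M": {"ATG": 1.0},
    "K": {"AAA": 0.74, "AAG": 0.26},
    "L": {"CTG": 0.47, "TTA": 0.14, "TTG": 0.13, "CTT": 0.12, "CTC": 0.10, "CTA": 0.04},
    "*": {"TAA": 0.61, "TGA": 0.30, "TAG": 0.09},  # stop codons
}

# Reverse lookup: codon -> amino acid (or "*" for stop).
CODON_TO_AA = {codon: aa for aa, codons in HOST_USAGE.items() for codon in codons}


def split_codons(gene: str) -> list[str]:
    """Split a coding sequence into codons."""
    gene = gene.upper()
    if len(gene) % 3 != 0:
        raise ValueError("coding sequence length must be a multiple of 3")
    return [gene[i:i + 3] for i in range(0, len(gene), 3)]


def optimize(gene: str) -> str:
    """Swap each codon for the host's most frequent synonymous codon.

    The encoded protein is unchanged; only the choice of codons moves
    toward the host's bias.
    """
    out = []
    for codon in split_codons(gene):
        usage = HOST_USAGE[CODON_TO_AA[codon]]  # KeyError if this partial table lacks the codon
        out.append(max(usage, key=usage.get))
    return "".join(out)


def cai(gene: str) -> float:
    """Simplified Codon Adaptation Index: the geometric mean of each codon's
    relative adaptiveness w = freq(codon) / freq(best synonymous codon).

    The standard index usually skips single-codon amino acids such as Met
    and Trp; this sketch keeps every codon for simplicity.
    """
    logs = []
    for codon in split_codons(gene):
        usage = HOST_USAGE[CODON_TO_AA[codon]]
        logs.append(math.log(usage[codon] / max(usage.values())))
    return math.exp(sum(logs) / len(logs))
```

With this toy table, `optimize("ATGAAGTTATAG")` returns `"ATGAAACTGTAA"`, and every codon in the result is the host’s favourite, so its CAI is 1.0. Real recoding tools usually weigh more than raw frequency (for example mRNA structure or avoiding repeated codons), so greedy replacement is only the simplest possible baseline.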

Ubiquitous Computing

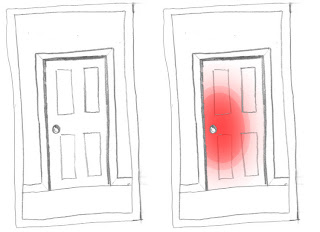

Ubicomp helped me get more coverage of the literature in my field, although more on the ubiquitous side than the interactive side. I was interested in ambient displays and my project partner was interested in household devices, so we came up with the humourous yet sincere project title “Devices that Bruise: Battered Device Syndrome”. The idea was to give everyday items the ability to show feedback when they were damaged, whether by something that causes immediate harm or by behaviour that would cause harm if it continued in the long run. Below is what we imagined a door would look like if it were slammed shut.

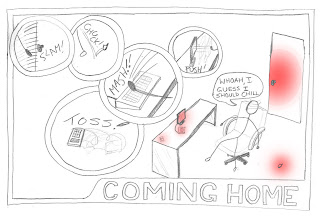

And here is a storyboard of some devices that have been “bruised”.

In addition to giving users feedback about unintentional misuse, these devices could let you know when you are in a bad mood, as the user above realizes, much as a good friend might point out your mood. For our prototype, we wired an accelerometer up to some LEDs, which isn’t visually interesting enough for me to show here. One concern with this sort of behavioural feedback is that it might encourage the opposite of the response we are looking for, as in the art project love hate punch, which was one of our inspirations. User testing would be needed to find out.
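The accelerometer-to-LED prototype isn’t described in detail, so here is a minimal sketch of how such a “bruise” could be computed, assuming accelerometer samples in units of g. The threshold, healing rate, scaling factor and LED count are all hypothetical values chosen for illustration, and `Bruise` is an illustrative class name; the hardware I/O is left out entirely.

```python
import math
from dataclasses import dataclass

# Hypothetical tuning parameters; a real prototype would tune these on the device.
SLAM_THRESHOLD_G = 2.5   # impacts above this magnitude (in g) count as rough handling
BRUISE_SCALE_G = 5.0     # excess g-force that would take a pristine device to fully bruised
HEAL_PER_SAMPLE = 0.01   # how much the bruise fades with each gentle sample
NUM_LEDS = 8


@dataclass
class Bruise:
    level: float = 0.0  # 0.0 = pristine, 1.0 = fully bruised

    def update(self, ax: float, ay: float, az: float) -> None:
        """Feed one accelerometer sample (in g) and update the bruise level."""
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        if magnitude > SLAM_THRESHOLD_G:
            # Harder impacts bruise more: add the excess over the threshold, scaled.
            excess = (magnitude - SLAM_THRESHOLD_G) / BRUISE_SCALE_G
            self.level = min(1.0, self.level + excess)
        else:
            # Gentle handling lets the bruise slowly heal.
            self.level = max(0.0, self.level - HEAL_PER_SAMPLE)

    def leds_lit(self) -> int:
        """Number of LEDs to light, proportional to the bruise level."""
        return round(self.level * NUM_LEDS)
```

For example, a door at rest reads about 1 g and stays unbruised, while a single 5 g slam takes `level` to 0.5 and lights 4 of the 8 LEDs. Letting the bruise heal slowly is one way to separate a one-off accident from the repeated, long-run misuse described above.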


Machine Learning

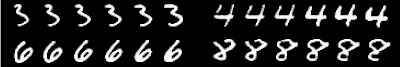

This was mentioned in an earlier post. Upon learning that Restricted Boltzmann Machines, which are pretty good digit classifiers, can also generate new digits, I immediately wanted to apply this to HCI somehow. These animations were a large part of my inspiration. Given a user’s ambiguous digit, I wanted to show the user how they could improve their writing to make it easier for the device to recognize. The philosophy here isn’t to show the user the “perfect” digit, but rather to show how their own digit could be improved: personalized feedback. I was afraid that the animations wouldn’t be smooth enough to be pedagogical, but my professor said he would be surprised if they weren’t. It turns out that the animations were very smooth, but did not always work perfectly. Below are examples of improved digits, starting with the original digit on the left and evolving to the right.
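The post doesn’t spell out how the frames are produced, so here is a minimal sketch of one way to do it, assuming an already-trained RBM with binary hidden units and pixel intensities in [0, 1]. The visible units start at the user’s digit, and each frame is the result of one up-down (hidden, then visible) pass. Using mean-field probabilities instead of sampled binary states is an assumption made here to keep the frames smooth. The weights below are random placeholders rather than a trained model, and all function names are illustrative.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def hidden_probs(v, W, b_hid):
    """p(h_j = 1 | v) for each hidden unit j; W[i][j] links pixel i to hidden unit j."""
    return [sigmoid(b_hid[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(b_hid))]


def visible_probs(h, W, b_vis):
    """p(v_i = 1 | h) for each pixel i."""
    return [sigmoid(b_vis[i] + sum(h[j] * W[i][j] for j in range(len(h))))
            for i in range(len(b_vis))]


def evolve(digit, W, b_vis, b_hid, steps=10):
    """Animation frames: the user's digit, then successive mean-field reconstructions."""
    frames = [list(digit)]
    v = list(digit)
    for _ in range(steps):
        v = visible_probs(hidden_probs(v, W, b_hid), W, b_vis)
        frames.append(v)
    return frames


# Toy usage with random placeholder weights; a real model would be trained on digits.
rng = random.Random(0)
n_vis, n_hid = 16, 8  # a 4x4 "image" for brevity (MNIST digits are 28x28)
W = [[rng.gauss(0.0, 0.1) for _ in range(n_hid)] for _ in range(n_vis)]
b_vis, b_hid = [0.0] * n_vis, [0.0] * n_hid
user_digit = [rng.random() for _ in range(n_vis)]
frames = evolve(user_digit, W, b_vis, b_hid, steps=5)
```

Because the chain starts from the user’s own strokes rather than from noise, the early frames stay close to what they wrote. That matches the goal of showing how *their* digit could improve rather than jumping straight to a prototypical one.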

For the digits above, the classifier can easily tell what they are, but improvement is still possible. For some digits in the dataset, however, it isn’t really clear what was meant, so the feedback must show how to disambiguate towards either alternative. Below, we see an ambiguous digit in the centre. To make it a better 1, the evolution goes left; to make it a better 2, the evolution goes right.
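A sketch of how the two-directional strip could be built, under the assumption (not stated in the post) that the RBM is trained jointly on pixels and one-hot label units, so a class can be “clamped” while only the pixels are reconstructed. Running the chain once with the label fixed to 1 and once with it fixed to 2, then placing the first run reversed on the left, gives the layout described above. `W`, `U`, `evolve_toward` and `disambiguation_strip` are hypothetical names, and the weights are random placeholders.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def evolve_toward(digit, target, W, U, b_vis, b_hid, steps=10):
    """Frames moving `digit` toward class `target` with the label units clamped.

    W[i][j]: pixel i <-> hidden j weights; U[k][j]: label k <-> hidden j weights.
    """
    label = [1.0 if k == target else 0.0 for k in range(len(U))]
    v = list(digit)
    frames = [list(v)]
    for _ in range(steps):
        # Hidden units are driven by the current pixels *and* the clamped label.
        h = [sigmoid(b_hid[j]
                     + sum(v[i] * W[i][j] for i in range(len(v)))
                     + sum(label[k] * U[k][j] for k in range(len(label))))
             for j in range(len(b_hid))]
        # Only the pixels are reconstructed; the label stays fixed at `target`.
        v = [sigmoid(b_vis[i] + sum(h[j] * W[i][j] for j in range(len(h))))
             for i in range(len(v))]
        frames.append(v)
    return frames


def disambiguation_strip(digit, left_class, right_class, W, U, b_vis, b_hid, steps=5):
    """Ambiguous digit in the centre, evolving left toward one class, right toward the other."""
    left = evolve_toward(digit, left_class, W, U, b_vis, b_hid, steps)
    right = evolve_toward(digit, right_class, W, U, b_vis, b_hid, steps)
    return list(reversed(left[1:])) + [list(digit)] + right[1:]


# Toy usage with random placeholder weights; a real model would be trained on labelled digits.
rng = random.Random(1)
n_vis, n_hid, n_labels = 16, 8, 10
W = [[rng.gauss(0.0, 0.1) for _ in range(n_hid)] for _ in range(n_vis)]
U = [[rng.gauss(0.0, 0.5) for _ in range(n_hid)] for _ in range(n_labels)]
b_vis, b_hid = [0.0] * n_vis, [0.0] * n_hid
ambiguous = [rng.random() for _ in range(n_vis)]
strip = disambiguation_strip(ambiguous, 1, 2, W, U, b_vis, b_hid, steps=5)
```

The same starting pixels feed both chains, so the only thing that differs between the left and right halves is which label is clamped. That is what lets the strip show the user two competing ways to fix the same stroke.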


Although it doesn’t look that cool, I’m pretty excited by these results. As far as I know, classifiers in machine learning haven’t been used in this way before, and I would like to think of more interactive ways to apply them. In general, Artificial Intelligence and Human-Computer Interaction are not as closely related as they should be if we want to make computers worth caring about.
