It’s been a while! Lots of excitement is upcoming and I’m happy to finally share.

At the end of April 2016, after a year and a half, I left Occipital and returned to Toronto to focus on personal projects. I had contemplated this move for a while, and the timing just happened to work out. I had worked on some really amazing things at Occipital, including building out access to deeper features of the Structure SDK, increasing Unity integration, and building the early parts of the upcoming Bridge Engine for Mixed Reality.

Transitioning from a life in academia to professional software development is a big change. Almost all the software I’d written before I started at Occipital in late 2014 was for me alone, and for demos where I’d be present in person in case something went wrong. At Occipital, I had to massively professionalize: not just write code that exposed new features and interaction models, but write code that worked robustly across several devices and stayed maintainable. I’m saying this both as a warning for other academics who join industry and as a call to adventure! More academics should spend time in industry! I have since looked back at code I wrote while still in academia, and it’s often embarrassing; the worst parts are over-rushed and, paradoxically, also over-designed. I am now a much more well-rounded programmer.

As time went on, I found myself increasingly involved in extracurricular projects. These took up more and more of my resources (temporal, mental, emotional, etc.), and I eventually realized that they all fit into a single theme. While I previously saw myself as an interaction designer for novel interfaces, what I really, truly, deeply cared about was building novel, participatory, digital theatre-like experiences. With the magic of hindsight, one can see how the latter is a more fully actualized version of the former. At Occipital, I was working on a very powerful platform for other people to build content on. I realized it made more sense for me to build the content myself, in ad-hoc collaborations suited to each project, rather than as part of a persistent company. At least for the next little while.

So I quit Occipital and exercised all my options. Since I was on a TN visa, and Toronto is much cheaper than SF, I then moved back to Toronto. I established a sole proprietorship to do contract work, and made sure the keeping-me-afloat contract work never exceeded 50% of my time.

PLAYLINES

I met Rob Morgan at GDC 2015, where he styled himself as a video game writer for Virtual Reality. We chatted back and forth over the following year. When he saw The Painting, the project Josh Marx and I made, he ecstatically recruited me to do something similar in the UK. And thus arose Coming Out, a locative audio narrative experience about the Future of Love, wonderfully digital and queer. This project was sponsored by the UK arts organization NESTA, and I got to fly to the UK twice over the summer: once to run a preview version at the Last Word festival, and once to do an in-situ installation at the site where the final project will take place, during FutureFest, September 17-18. Rob and I iterated extensively on the UX and the wording of the script. One intentionally unusual aspect of this project is that the user is not required to look at the screen at all; it’s meant to free them from our usual cellphone-focused daily experience. An iBeacon cannot detect where someone is looking, so Rob has to write the words, and I have to write robust software, so that together we can subtly guide people around as if they’re listening to someone monologuing to them over the phone. We have excellent sound editing and voice actors, so I got to listen to lots of swoony British accents.
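Guiding someone by sound alone means the software has to decide reliably when they have reached a spot. As one hedged illustration of what “robust” can mean here (not the Coming Out implementation, whose details aren’t described), here is a minimal Python sketch. It assumes the phone reports a signal strength (RSSI, in dBm) for each beacon, smooths it with an exponential moving average, and uses separate enter and exit thresholds (hysteresis) so a listener lingering at the edge of a zone doesn’t retrigger the same line of script. The class name, thresholds, and smoothing factor are all hypothetical and would need tuning on site.

```python
from typing import List, Optional


class BeaconZone:
    """Debounced 'is the listener near this beacon?' state for one beacon.

    Hypothetical sketch: RSSI readings are noisy, so they are smoothed with an
    exponential moving average, and the exit threshold sits below the enter
    threshold so small fluctuations near the boundary produce no events.
    """

    def __init__(self, enter_dbm: float = -70.0, exit_dbm: float = -80.0,
                 alpha: float = 0.3) -> None:
        if exit_dbm >= enter_dbm:
            raise ValueError("exit threshold must be below enter threshold")
        self.enter_dbm = enter_dbm
        self.exit_dbm = exit_dbm
        self.alpha = alpha  # weight of the newest reading, 0 < alpha <= 1
        self.smoothed: Optional[float] = None
        self.inside = False

    def update(self, rssi_dbm: float) -> Optional[str]:
        """Feed one RSSI reading; return 'enter', 'exit', or None."""
        if self.smoothed is None:
            self.smoothed = rssi_dbm
        else:
            self.smoothed = self.alpha * rssi_dbm + (1 - self.alpha) * self.smoothed
        if not self.inside and self.smoothed > self.enter_dbm:
            self.inside = True
            return "enter"
        if self.inside and self.smoothed < self.exit_dbm:
            self.inside = False
            return "exit"
        return None


# Simulated walk: approach the beacon, linger at the zone edge, then leave.
zone = BeaconZone()
events: List[Optional[str]] = [zone.update(r) for r in [-60.0] * 10]
events += [zone.update(r) for r in [-72.0, -78.0] * 5]  # noisy edge readings
events += [zone.update(r) for r in [-95.0] * 10]
print([e for e in events if e])  # ['enter', 'exit']
```

The lingering readings bounce around the enter threshold but never push the smoothed value below the exit threshold, so the walk produces exactly one entry and one exit rather than a stutter of retriggers.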

Post-FutureFest, we’re looking to develop this format further under the label Playlines, a portmanteau of play and leylines. There’s at least one possible locative project in the pipeline for the next year.

RAKTOR

I met Jasper de Tarr through Kinetech nearly a year and a half ago, and we kept talking about doing theatre in virtual reality. What would it look like? Would everyone be in VR headsets? (No; why would you need to be in the same place, then?) We could have done anything at all, and it would count as VR theatre, since no one else was doing it. We ran several small performances featuring a single audience member in a VR headset, with everyone else around them. It worked surprisingly well. We then did a larger-scale performance for DorkBot at the Grey Area, and this coming Tuesday, our 50-minute show debuts at the San Francisco Fringe Festival. I don’t want to spoil too much, as I want you to see it. I will say that there are a couple of VR headsets, and some of the audience spends time in them. This is our next iteration on meaningful VR theatre interactions, and I’d love for you to see it and hear what you think. Say you’re going on the Facebook event here!

Right now, Raktor thinks of itself as an art collective, not a company. At every VR meetup we go to, we have to re-explain this to the deluge of VR startup folk: no, we don’t have VC funding; no, we don’t have a 10x plan; we are simply very competent and experienced participatory improv theatre artists who can also build and run a futuristic digital show that isn’t a half-assed “X for Y” like most of the other stuff you see. Raktor takes its name from a short passage in Neal Stephenson’s The Diamond Age describing audience interaction with traditional theatre a century hence.

We have a live Mixed Reality VR show in the prototype phase. It has been sidelined while we polish the Fringe show, but look for it again in the coming months. We’re currently hunting for a dedicated venue in the Bay Area where we can set up a Vive and a green screen, and host several audience members in person. Our intention for this show is to have half of its audience in person and the other half coming in over Twitch or another streaming service (highly suggestive hint: contact me if you have a streaming service that wants to work with us). We care a lot about meaningful interactions between the performers and the live and remote audiences. There’s no point in live-streaming our show out to the internets unless we can figure out a way for those folks to meaningfully interact. At this stage, we’d rather have 10 meaningfully interacting audience members than get really popular and have 10,000.

Most Mixed Reality VR videos use a colour camera with a known position relative to the VR rig and a green screen behind. While this works okay, it has two frustrating problems:

(1) props in VR that the performer might manipulate have to be explicitly declared as foreground, and the system chooses whether to render them behind or in front of the performer based on an arbitrary threshold, like the position of the performer’s head. This is broken for multiple performers, and has all sorts of other awkward cases if you’re doing any sort of performative prop manipulation.

(2) the system does not know the pose of the performer, merely which pixels the performer occupies.
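To make problem (1) concrete, here is a minimal Python sketch contrasting two compositing rules on a single scanline. The first is the single-threshold rule: every virtual pixel is drawn either in front of or behind the performer depending on whether it is nearer than the performer’s head. The second is a per-pixel depth test: the real depth at each pixel (e.g. from a depth camera) is compared against the virtual scene’s depth buffer. This is an illustration under assumptions, not the setup described in this post: the function names are hypothetical, colours are stand-in strings, and both depth maps are assumed to be already registered to the same camera viewpoint and measured in the same units.

```python
from typing import List

INF = float("inf")  # depth value meaning "nothing at this pixel"

Image = List[List[str]]    # per-pixel colour (strings stand in for RGB here)
Depth = List[List[float]]  # per-pixel distance from the camera, in metres


def composite_head_threshold(virt_rgb: Image, virt_depth: Depth,
                             perf_rgb: Image, perf_mask: List[List[bool]],
                             head_depth: float) -> Image:
    """Green-screen style: treat the performer as a flat cutout at head depth.

    A virtual pixel covers the performer only if it is nearer than the head,
    regardless of where the performer's hands actually are.
    """
    out = []
    for y, row in enumerate(virt_rgb):
        out_row = []
        for x, virt_px in enumerate(row):
            virt_in_front = virt_depth[y][x] < head_depth
            if perf_mask[y][x] and not virt_in_front:
                out_row.append(perf_rgb[y][x])
            else:
                out_row.append(virt_px)
        out.append(out_row)
    return out


def composite_per_pixel_depth(virt_rgb: Image, virt_depth: Depth,
                              perf_rgb: Image, perf_depth: Depth) -> Image:
    """Depth-camera style: whichever surface is nearer wins at each pixel.

    perf_depth holds INF wherever no performer was segmented, so those pixels
    always show the virtual scene.
    """
    return [[perf_rgb[y][x] if perf_depth[y][x] < virt_depth[y][x] else virt_px
             for x, virt_px in enumerate(row)]
            for y, row in enumerate(virt_rgb)]


# One scanline: the performer's body is 2.0 m away, but their hand (x=2)
# reaches forward to 1.0 m, in front of a virtual prop at 1.5 m (x=1..2).
virt_rgb = [["bg", "prop", "prop"]]
virt_depth = [[INF, 1.5, 1.5]]
perf_rgb = [["body", "body", "hand"]]
perf_depth = [[2.0, 2.0, 1.0]]
perf_mask = [[d < INF for d in row] for row in perf_depth]

print(composite_head_threshold(virt_rgb, virt_depth, perf_rgb, perf_mask, 2.0))
# [['body', 'prop', 'prop']]  (the hand is wrongly hidden behind the prop)
print(composite_per_pixel_depth(virt_rgb, virt_depth, perf_rgb, perf_depth))
# [['body', 'prop', 'hand']]
```

The per-pixel rule lets the performer’s hand pass in front of a prop while their body stays behind it, and it needs no per-performer threshold when several people share the stage, provided the depth sensor can see each of them.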

The solution I prototyped this summer is to use a Kinect 2 both to separate the performer from the background and to track their skeleton. I’ve seen other people use this technique as an alternative to a green screen, but I’m pretty sure I’m the first to use it to replace the live person with a rigged virtual avatar. Check it out here, where I have a magic hat I can put on to change me from a performer into a virtual character:

Current weak points of this approach:

(1) The Kinect 2 has a low colour resolution at the scale you’d want to do a multi-person performance at.

(2) A single Kinect captures only one side of each performer, and performers nearer the camera will occlude those behind them.

I’d love an approach that solves these problems. If you know of one, please contact me; if I can’t find one, I’ll put one together myself if I have to, dammit.

The above Kinect 2/Vive Mixed Reality setup was put together at Electric Perfume, Toronto’s fantastic art incubator and performance space. They had all the hardware, and also purely white walls. Given my background in theatre and installation art, I like to think of it as a white-box theatre space.

For Raktor, one of the other show-running tools we wanted was a live set designer that someone could use from off-stage. I pictured this running on a tablet, giving an interactive top-down view of the small stage area. At around the same time, Henry Faber and TIFF approached me to do an installation for TIFF’s entirely sold-out POP series. TIFF is taking a pretty cool approach here. Having been effectively embedded in VR development for 2 years now, I find it good to be reminded that almost everyone else knows almost nothing about VR. In the normal installation art approach, you can visit the install space at any time over multiple weeks; it’s usually unattended and broken. Instead, TIFF ran 3 time-ticketed, intensive weekends showing off new VR platforms, with a staff attendant at each station trained in running them. This ensured that each station actually worked, and that people who had never worn a headset before and didn’t know what was going on could be hand-held through the experience.

For TIFF POP 3, I adapted the live set designer idea into Inverse Dollhouse, a 2-player asymmetric VR experience in which one person wears a headset and is passive, while the other, on a tablet, views them from above and is active. The person wearing the headset is the “doll”, and the top-down player plays with them in their dollhouse, arranging furniture around them. I retroactively decided that this was inspired by that other piece of Canadian content, The Friendly Giant.

Raktor also uses the technology from Inverse Dollhouse in our upcoming Fringe Show.

IMPROV REMIX

I got to present the paper that came out of my PhD thesis at DIS 2016 in Brisbane, Australia. This 10-page paper is a short summary of the whole work, but as a recovering academic (I’ll write more about that at some point), I must urge you to read my 180-page thesis if you really want a hope of understanding what the hell I was going on about.

The technical director for Improv Remix’s live performance, Montgomery Martin, is in the midst of a really cool thesis himself where, to put words in his mouth, he’s looking at software production practices and how they empower yet constrain modern theatre. He also works on Isadora, which is sweet. And he’s competent and nerdy, too.

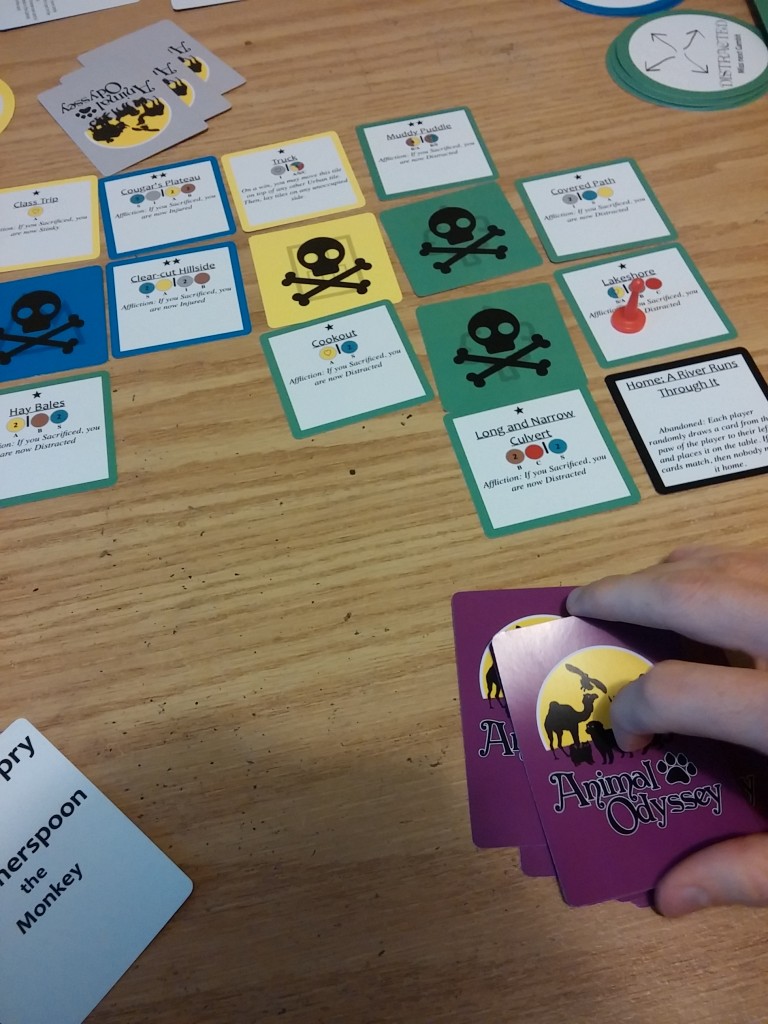

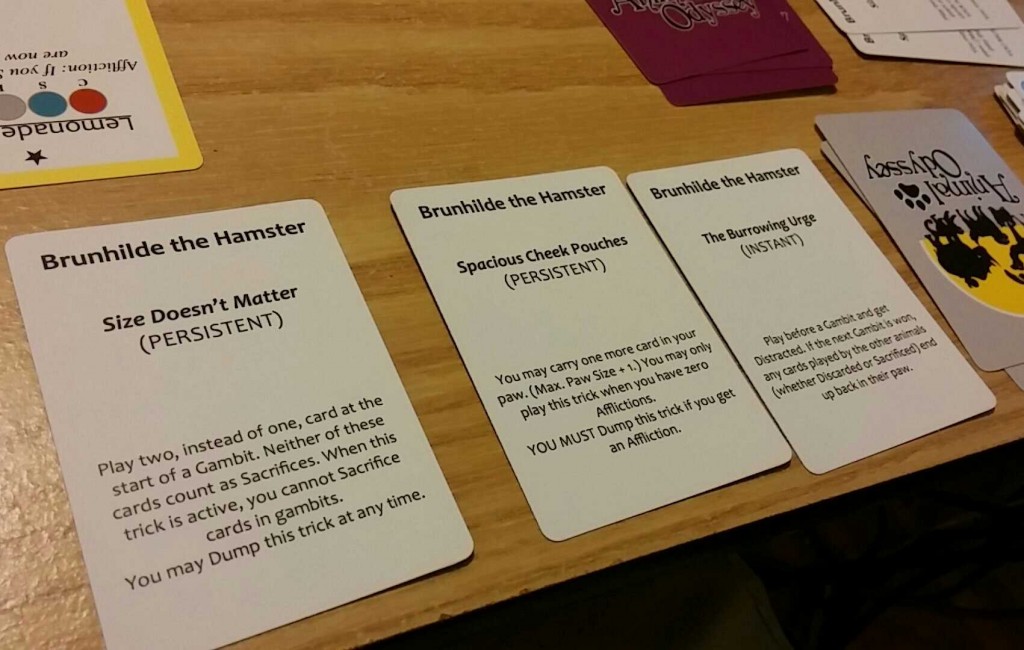

ANIMAL ODYSSEY

I haven’t talked much about this publicly, but for nearly three years I’ve been working on a board game with former housemates Cian Cruise and Jan Streekstra. I can’t quite remember how it started, but we now describe it as a cooperative multiplayer animal adventure game inspired by the movies of our youth: Milo and Otis, Homeward Bound, The Land Before Time, and All Dogs Go to Heaven. One of our clever innovations addresses a common problem in cooperative board games: if everyone knows everything, as in Pandemic, one person tends to take over for the group, which excludes everyone else. The game is heavy on hijinks and surprise, like a roguelite that we hope groups will play a couple of times in a session. We playtested the latest printed version at ProtoTO and even got to demo it to some people from Hasbro. Conceptually, the game is already hella tight, and we’re polishing the heck out of it at a steady pace.

DEVELOPMENT

At Occipital, my software development was primarily in C/C++/Objective-C, with occasional work in Unity C#. When I switched to personal prototyping projects over the summer, most of these were in Unity. Unity is great for quickly throwing together things that Just Work (TM), and its multi-platform support, though blunt, works surprisingly well, but it definitely feels like I’m in training-wheels mode. I can feel my deep programming skills atrophying slightly, in exchange for getting content out more quickly, albeit with less flexibility. I would love to hear about other programmers’ experiences switching from C/C++ to Unity C# as their primary language. When you’re doing low-level rendering work in Unity, as I was at Occipital and in other contract work this summer, its closed-source nature and big architectural changes between versions can be crazy frustrating. I’ve half-considered doing most of my development work in a native plugin, treating Unity as a high-level rendering and logging wrapper.

I moved back to SF this time around to do the Raktor Fringe show, and I was very fortunate to find a contract day job for at least the next 3 months that is flexible enough to let me run errands during the day for side projects. I’ll say more on it later, but it’s managing to meld my love of recombinant content, artificial life, and experiences playing Dwarf Fortress…
